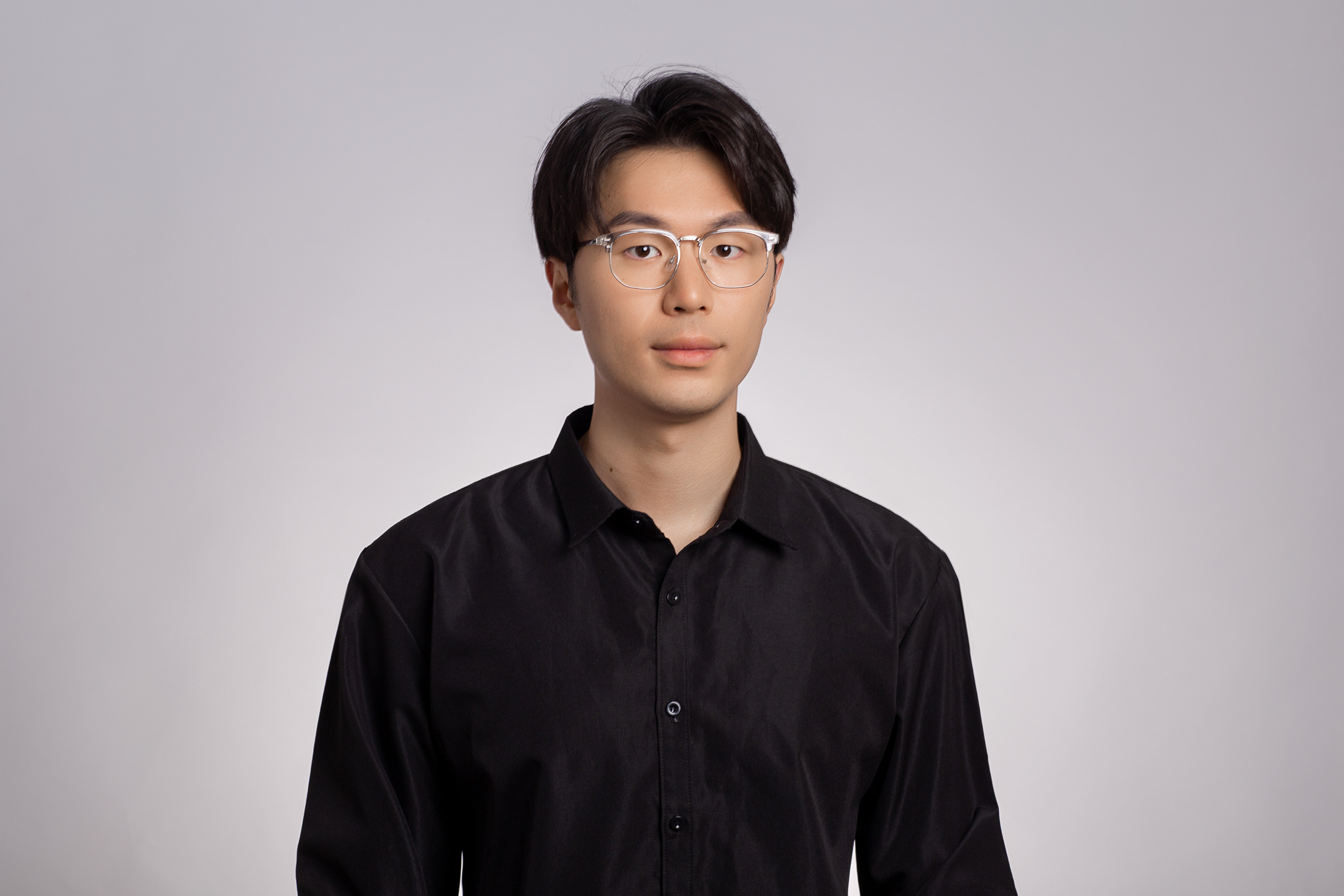

About Me

I am Ziheng Cheng, a second-year PhD student in the Department of IEOR at UC Berkeley, where I am fortunate to be supervised by Xin Guo. Before that, I received my B.S. degree from the School of Mathematical Sciences at Peking University, supervised by Cheng Zhang. I have also been very fortunate to work with Song Mei, Kun Yuan, and Tengyu Ma. My research interests span statistics, optimization, and machine learning, including multi-agent RL, language models and diffusion models, distributed optimization, sampling, and variational inference. If you are interested in my research, please feel free to contact me.

News

- Sep, 2025 A new paper “Bridging Discrete and Continuous RL: Stable Deterministic Policy Gradient with Martingale Characterization” on Arxiv!

- Sep, 2025 A new paper “Data-Efficient Training by Evolved Sampling” on Arxiv!

- Sep, 2025 Our papers “OVERT: A Benchmark for Over-Refusal Evaluation on Text-to-Image Models” and “Provable Sample-Efficient Transfer Learning Conditional Diffusion Models via Representation Learning” accepted at NeurIPS 2025!

- May, 2025 A new paper “OVERT: A Benchmark for Over-Refusal Evaluation on Text-to-Image Models” on Arxiv!

- Feb, 2025 A new paper “Provable Sample-Efficient Transfer Learning Conditional Diffusion Models via Representation Learning” on Arxiv!

- Jan, 2025 Our paper “Convergence of Distributed Adaptive Optimization with Local Updates” accepted at ICLR 2025!

- Oct, 2024 A new paper “Semi-Implicit Functional Gradient Flow” on Arxiv!

- Sep, 2024 Our paper “Functional Gradient Flows for Constrained Sampling” accepted at NeurIPS 2024!

- Sep, 2024 A new paper “Convergence of Distributed Adaptive Optimization with Local Updates” on Arxiv!

- May, 2024 Glad to serve as a reviewer of NeurIPS 2024 for the first time!

- May, 2024 Our paper “The Limits and Potentials of Local SGD for Distributed Heterogeneous Learning with Intermittent Communication” accepted at COLT 2024!

- May, 2024 Our papers “Kernel Semi-Implicit Variational Inference” and “Reflected Flow Matching” accepted at ICML 2024!

- Apr, 2024 Glad to be an incoming PhD student at UC Berkeley IEOR!

- Jan, 2024 Our paper “Momentum Benefits Non-IID Federated Learning Simply and Provably” accepted at ICLR 2024!

- Oct, 2023 Joined Microsoft Research Asia as an intern!

- Sep, 2023 Our paper “Particle-based Variational Inference with Generalized Wasserstein Gradient Flow” accepted at NeurIPS 2023!

- Jun, 2023 Visiting Tengyu Ma at Stanford!

- Jun, 2023 A new paper “Momentum Benefits Non-IID Federated Learning Simply and Provably” on Arxiv!

- May, 2023 A new paper “Joint Graph Learning and Model Fitting in Laplacian Regularized Stratified Models” on Arxiv!

Publications

(Preprint) Bridging Discrete and Continuous RL: Stable Deterministic Policy Gradient with Martingale Characterization

Ziheng Cheng, Xin Guo, Yufei Zhang

[Arxiv]

(Preprint) Data-Efficient Training by Evolved Sampling

Ziheng Cheng, Zhong Li, Jiang Bian

[Arxiv]

(NeurIPS 2025) OVERT: A Benchmark for Over-Refusal Evaluation on Text-to-Image Models

Ziheng Cheng*, Yixiao Huang*, Hui Xu, Somayeh Sojoudi, Xuandong Zhao, Dawn Song, Song Mei

[Arxiv]

(NeurIPS 2025) Provable Sample-Efficient Transfer Learning Conditional Diffusion Models via Representation Learning

Ziheng Cheng, Tianyu Xie, Shiyue Zhang, Cheng Zhang

[Arxiv]

(Preprint) Semi-Implicit Functional Gradient Flow

Shiyue Zhang*, Ziheng Cheng*, Cheng Zhang

[Arxiv]

(ICLR 2025) Convergence of Distributed Adaptive Optimization with Local Updates

Ziheng Cheng, Margalit Glasgow

[Arxiv]

(NeurIPS 2024) Functional Gradient Flows for Constrained Sampling

Shiyue Zhang*, Longlin Yu*, Ziheng Cheng*, Cheng Zhang

[Arxiv]

(COLT 2024) The Limits and Potentials of Local SGD for Distributed Heterogeneous Learning with Intermittent Communication

Kumar Kshitij Patel, Margalit Glasgow, Ali Zindari, Lingxiao Wang, Sebastian U Stich, Ziheng Cheng, Nirmit Joshi, Nathan Srebro

[Arxiv]

(ICML 2024) Kernel Semi-Implicit Variational Inference

Ziheng Cheng*, Longlin Yu*, Tianyu Xie, Shiyue Zhang, Cheng Zhang

[Arxiv]

(ICML 2024) Reflected Flow Matching

Tianyu Xie*, Yu Zhu*, Longlin Yu*, Tong Yang, Ziheng Cheng, Shiyue Zhang, Xiangyu Zhang, Cheng Zhang

[Arxiv]

(ICLR 2024) Momentum Benefits Non-IID Federated Learning Simply and Provably

Ziheng Cheng*, Xinmeng Huang*, Pengfei Wu, Kun Yuan

[Arxiv]

(NeurIPS 2023) Particle-based Variational Inference with Generalized Wasserstein Gradient Flow

Ziheng Cheng*, Shiyue Zhang*, Longlin Yu, Cheng Zhang

[Arxiv]

(Preprint) Joint Graph Learning and Model Fitting in Laplacian Regularized Stratified Models

Ziheng Cheng*, Junzi Zhang*, Akshay Agrawal, Stephen Boyd

[Arxiv]

Experiences

Peking University

Undergrad Research (advisor: Prof. Cheng Zhang, School of Mathematical Sciences)

May. 2022 – Present

Stanford University

Summer Research (advisor: Prof. Tengyu Ma, Department of Computer Science)

Jun. 2023 – Oct. 2023

Peking University

Undergrad Research (advisor: Prof. Kun Yuan, Center for Machine Learning Research)

Mar. 2023 – Sept. 2023

Stanford University

Remote Research (advisor: Prof. Stephen Boyd, Department of Electrical Engineering)

Oct. 2022 – May. 2023